Master's Projects & Independent Study Projects


Short Projects

Thermal Navigation for Aerial Robotics

Supervisor: Kostas Alexis (UNR). Available.

The goal of this project is to investigate the potential of thermal navigation for small aerial robots. By integrating a minimal sensor suite that combines thermal vision, inertial sensors, and a magnetometer, we want to examine whether an algorithm can localize the aerial vehicle with respect to its environment without visible-light cameras. This will lay the groundwork for autonomous navigation when lighting conditions do not allow normal cameras to operate, and will further open up the possibility of night navigation for micro aerial vehicles.

Download the Project Description (PDF)
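The project does not prescribe a fusion algorithm. As a hypothetical illustration of combining two of the listed sensors, the sketch below uses a complementary filter to fuse a gyroscope yaw rate with a magnetometer heading. The thermal-vision part of the suite is not modeled, and the function names and the blending weight `alpha` are assumptions, not part of the project description.

```python
import math


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def complementary_yaw(
    yaw_prev: float, gyro_z: float, mag_yaw: float, dt: float, alpha: float = 0.98
) -> float:
    """One step of a complementary filter for heading (radians).

    yaw_prev: previous heading estimate (rad)
    gyro_z:   yaw rate from the gyroscope (rad/s)
    mag_yaw:  heading derived from the magnetometer (rad)
    dt:       time step (s)
    alpha:    weight on the gyro prediction (hypothetical default)
    """
    # Predict: integrate the gyroscope rate (smooth, but drifts with bias).
    yaw_pred = yaw_prev + gyro_z * dt
    # Correct: pull toward the magnetometer heading along the shortest arc,
    # so that e.g. 3.1 rad and -3.1 rad are treated as neighbours.
    innovation = wrap_angle(mag_yaw - yaw_pred)
    return wrap_angle(yaw_pred + (1.0 - alpha) * innovation)


if __name__ == "__main__":
    yaw = 0.0
    bias = 0.01  # rad/s, simulated constant gyro bias; the vehicle is not rotating
    for _ in range(2000):
        yaw = complementary_yaw(yaw, gyro_z=bias, mag_yaw=1.0, dt=0.01)
    print(f"estimated yaw: {yaw:.3f} rad")
```

Integrating the gyroscope alone drifts with its bias, while the magnetometer alone is noisy and easily disturbed near motors; `alpha` trades these off. A constant gyro bias leaves only a small steady-state offset instead of unbounded drift.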

Old (Accomplished) Projects

Dense Mapping and Autonomous MAV Navigation using Time-of-Flight 3D Cameras and Inertial Sensors

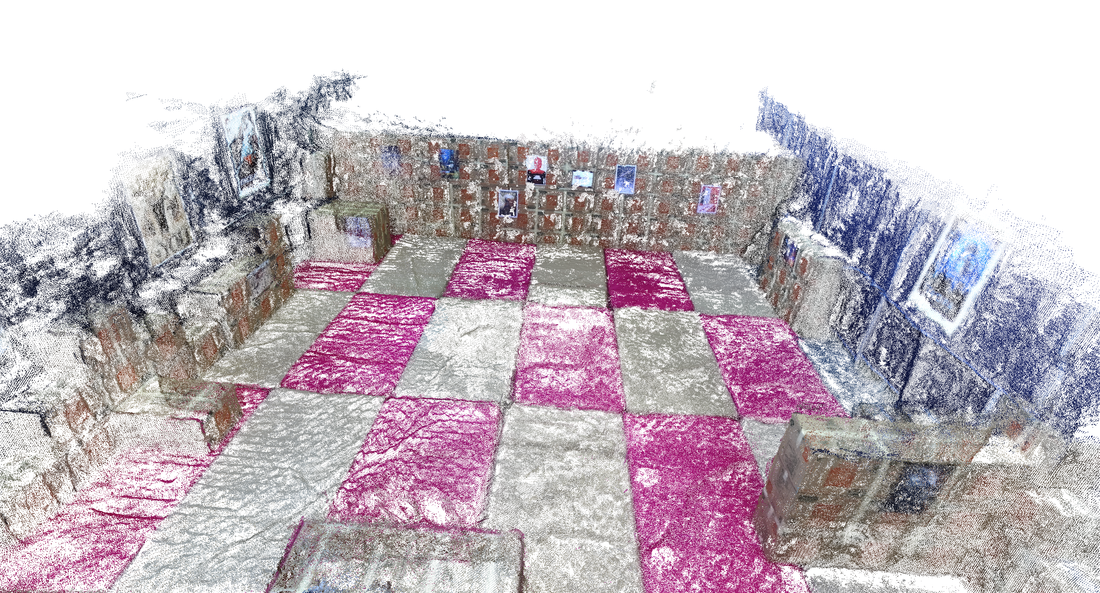

Supervisor: Kostas Alexis (UNR). Assigned. Student: Ashutosh Singandhupe.

The goal of this project is to develop a hardware and software module that fuses data from Time-of-Flight 3D sensors, visible-light cameras, and inertial measurement units in order to (a) enable accurate robot localization and 3D mapping using a Micro Aerial Vehicle (MAV), and (b) give the robot the capability of collision-free navigation in previously unknown environments.

Download the Project Description (PDF)

Aerial Robotic Path Planning for Inspection Operations

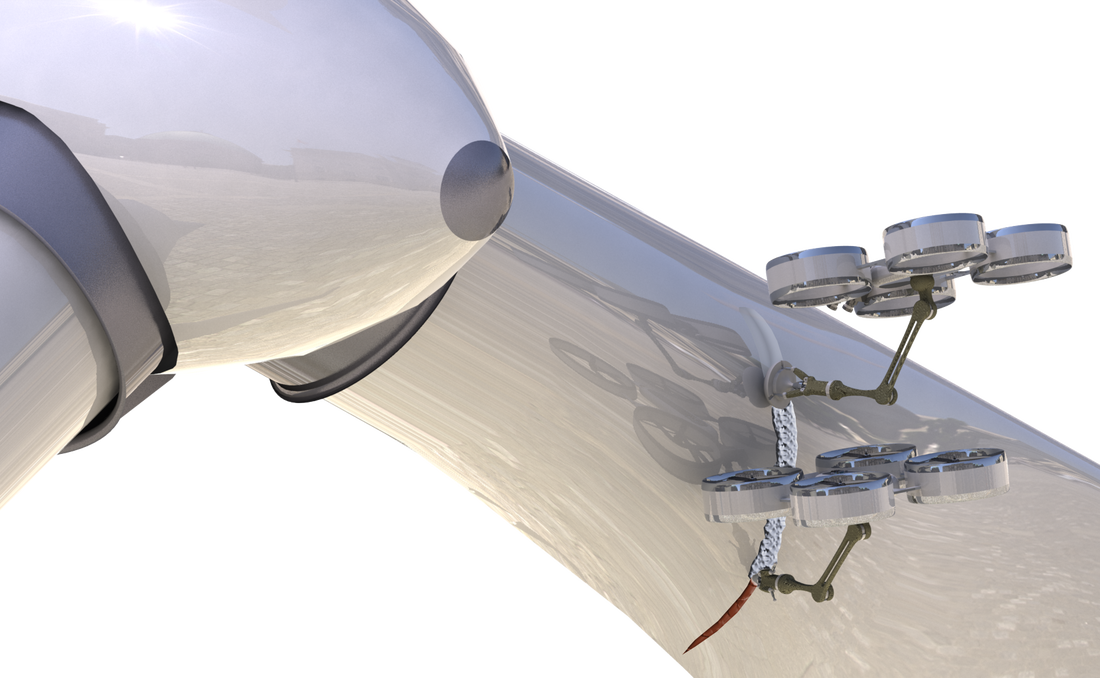

Supervisor: Kostas Alexis (UNR). Assigned. Student: Harinder Singh Toor.

This project aims to intelligently combine methods for explicit inspection path planning (when a model of the structure to be inspected exists) with active exploitation of the probabilistic, vision-based feedback available on most high-end aerial robots, in order to develop an adaptive algorithm capable of “active sensing” for complete, high-fidelity structural inspection. By actively closing the perception-navigation loop of the system, the goal is to reach the level of autonomy required for complete autonomous 3D inspection in partially known or completely unknown environments.

Download the Project Description (PDF)

Reconfigurable Multi-agent Autonomous Exploration using Aerial Robots

Supervisor: Kostas Alexis (UNR). Assigned. Student: Sanket Lokhande.

Download the Project Description (PDF)

Development of the UNR Flying Arena

Supervisors: Kostas Alexis (UNR), Luis Rodolfo Garcia Carrillo (UNR). Available to multiple students; 2 students already assigned: Aswathi Sandeep, Alexander Wittmann.

This project aims to develop the UNR Flying Arena. Located in the High-Bay laboratory, the arena gives UNR a large space for testing unmanned aircraft. The available infrastructure includes a motion capture system that provides ground-truth measurements and also enables us to deploy safety mechanisms that can take over control of our robots in an emergency. The project will develop the corresponding ROS-based framework.

Download the Project Description (PDF)
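As a hypothetical sketch of the kind of safety mechanism described above, the check below decides when a supervisor should take over a vehicle based on its motion-capture position. It triggers if the vehicle leaves an axis-aligned safe volume (shrunk by a margin) or if the motion-capture data becomes stale. The class and function names, the margin, and the timeout are assumptions for illustration. In a ROS-based framework such a check would likely run in a node subscribed to the motion-capture pose, which is not shown here.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Geofence:
    """Hypothetical axis-aligned safe volume in the motion-capture frame (metres)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z_min: float
    z_max: float

    def contains(self, p: Tuple[float, float, float], margin: float = 0.0) -> bool:
        """True if point p lies inside the volume shrunk by `margin` on every side."""
        x, y, z = p
        return (
            self.x_min + margin <= x <= self.x_max - margin
            and self.y_min + margin <= y <= self.y_max - margin
            and self.z_min + margin <= z <= self.z_max - margin
        )


def should_take_over(
    fence: Geofence,
    position: Tuple[float, float, float],
    pose_stamp: float,
    now: float,
    margin: float = 0.5,
    timeout: float = 0.1,
) -> bool:
    """Decide whether the safety controller should take over (hypothetical policy).

    Times are in seconds. Stale motion-capture data counts as unsafe,
    because the vehicle's position can no longer be verified.
    """
    if now - pose_stamp > timeout:
        return True
    return not fence.contains(position, margin)


if __name__ == "__main__":
    arena = Geofence(-5.0, 5.0, -5.0, 5.0, 0.0, 4.0)
    print(should_take_over(arena, (0.0, 0.0, 1.0), 10.00, 10.05))  # centre, fresh data
    print(should_take_over(arena, (4.8, 0.0, 1.0), 10.00, 10.05))  # inside the margin band
    print(should_take_over(arena, (0.0, 0.0, 1.0), 10.00, 10.50))  # stale pose
```

Treating stale data as a violation is a conservative design choice: losing motion-capture tracking is itself a reason to hand control to the safety mechanism.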