Semester Projects

The course is developed around challenging research-oriented semester projects. Those that were provided for Fall 2016 are listed below.

|

Project #5: Aerial Robotics for Nuclear Site Characterization

Description: A century of nuclear research, war and accidents created a worldwide legacy of contaminated sites. Massive cleanup of that nuclear complex is underway. Our broad research goal is to develop the means to explore and rad-map nuclear sites by deploying unprecedented, tightly integrated sensing, modeling and planning on small flying robots. Within this project in particular, the goal is to develop multi-modal sensing and mapping capabilities by fusing visual cues with thermal and radiation camera data alongside inertial sensor readings. Ultimately, the aerial robot should be able to derive 3D maps of its environment that are further annotated with the spatial thermal and radiation distribution. Technically, this will be achieved through the development of a multi-modal localization and mapping pipeline that exploits the different sensing modalities (inertial, visible-light, thermal and radiation camera) in a synchronized and complementary fashion. Finally, within the project you are expected to demonstrate the autonomous multi-modal mapping capabilities through relevant experiments using a multirotor aerial robot.

Research Tasks:

Team:

Collaborators: Nevada Advanced Autonomous Systems Innovation Center - https://www.unr.edu/naasic

Budget: $2,000

Indicative Video of the Results of the Team:

Project #4: Aerial Robotics for Climate Monitoring and Control

Description: Within this project you are asked to develop an aerial robot capable of environmental monitoring. In particular, the robot should carry an “environmental sensing pod” that integrates visible-light and multispectral cameras, a GPS receiver, and inertial, atmospheric-quality and temperature sensors. Through appropriate sensor fusion, the aerial robot should be able to estimate a consistent 3D terrain/atmospheric map of its environment in which every spatial point is annotated with atmospheric measurements and the altitude at which they were taken (or ideally their spatial distribution). To enable advanced operational capacity, a fixed-wing aerial robot should be employed and GPS-based navigation should be automated. Ideally, the aerial robot should also be able to autonomously derive paths that ensure sufficient coverage of environmental sensing data.

Research Tasks:

Team:

Budget: $2,000

Indicative Video of the Results of the Team:

Project #3: Robots to Study Lake Tahoe!

Description: Water is a nexus of global struggle, and increasing pressure on water resources is driven by large-scale perturbations such as climate change, invasive species, dam development and diversions, pathogen occurrence, nutrient deposition, pollution, toxic chemicals, and increasing and competing human demands. These problems are multidimensional and require integrative, data-driven solutions enabled by environmental data collection at various scales in space and time. Currently, most ecological research that quantifies impacts from perturbations in aquatic ecosystems is based on (i) the collection of single-snapshot data in space, or (ii) multiple collections from a single part of an ecosystem over time. Ecosystems are inherently complex; relying on these relatively coarse and incomplete collections in space and time can therefore lead to less-than-optimal data-based solutions. The goal of this project is to design and develop a platform that can be used on the surface of a lake to quantify water quality changes in the nearshore environment (1-10 m deep). The platform would be autonomous and would monitor water quality (temperature, turbidity, oxygen, chlorophyll a) at a given depth.

Research Tasks:

Team:

Collaborators: Aquatic Ecosystems Analysis Lab - http://aquaticecosystemslab.org/ , NAASIC

Budget: $2,000

Project #2: Autonomous Cars Navigation

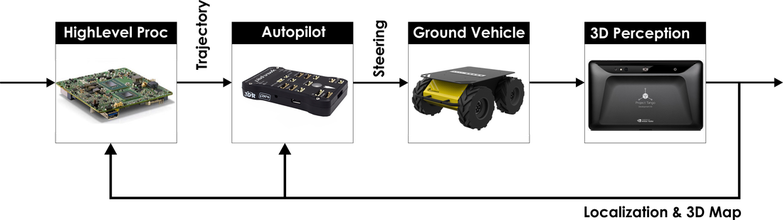

Description: Autonomous transportation systems are not only an ongoing research trend but also a key factor for the progress of our societies, safer transportation, greener technologies, growth and a better quality of life. The goal of this project is to develop a miniaturized autonomous car able to navigate while mapping its environment, detecting objects in it (other cars) and performing collision-avoidance maneuvers. To achieve this goal, the robot will integrate controlled steering and a perception system that fuses data from cameras, an inertial measurement unit and depth sensors, enabling it to robustly perform the simultaneous localization and mapping task. Finally, a local path planner will guide the steering control towards collision-free paths.

Research Tasks:

Team:

Collaborators: Nevada Center for Applied Research, NAASIC

Budget: $2,000
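As a rough illustration of the local path planner mentioned in the Project #2 description, the following is a minimal sketch and not the project's prescribed method. It assumes a kinematic bicycle model, obstacles given as 2D points in the car frame (for example from the depth sensors), and a small set of candidate steering angles. All function names and parameter values (`rollout`, `pick_steering`, wheelbase, safety radius) are hypothetical and chosen only for illustration.

```python
import math

def rollout(steer, speed=1.0, wheelbase=0.3, dt=0.1, steps=15):
    """Predict a short path with a kinematic bicycle model.

    The car starts at the origin heading along +x (car frame).
    All parameter values are illustrative assumptions.
    """
    x = y = yaw = 0.0
    path = []
    for _ in range(steps):
        x += speed * math.cos(yaw) * dt
        y += speed * math.sin(yaw) * dt
        yaw += speed / wheelbase * math.tan(steer) * dt
        path.append((x, y))
    return path

def is_collision_free(path, obstacles, safety_radius=0.2):
    """True if every path point keeps more than safety_radius from every obstacle."""
    return all(math.hypot(px - ox, py - oy) > safety_radius
               for px, py in path for ox, oy in obstacles)

def pick_steering(goal, obstacles, max_steer=0.5, n_candidates=11):
    """Pick the collision-free steering angle whose rollout ends nearest the goal.

    Returns None when every candidate collides, so the caller can brake.
    """
    best, best_cost = None, math.inf
    for i in range(n_candidates):
        steer = -max_steer + 2 * max_steer * i / (n_candidates - 1)
        path = rollout(steer)
        if not is_collision_free(path, obstacles):
            continue
        end_x, end_y = path[-1]
        cost = math.hypot(goal[0] - end_x, goal[1] - end_y)
        if cost < best_cost:
            best, best_cost = steer, cost
    return best
```

Calling `pick_steering((2.0, 0.0), [(1.0, 0.0)])` with an obstacle directly ahead yields a non-zero steering angle that curves around it. A real system would replace the point obstacles with the map produced by the localization and mapping pipeline and would re-plan at every control step.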

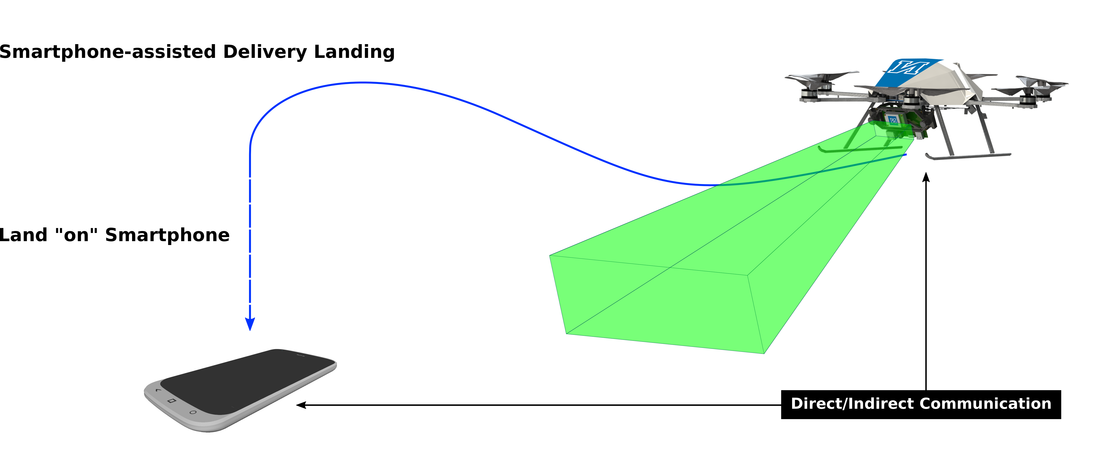

Project #1: Smartphone-assisted Delivery Drone Landing

Description: This project will run in collaboration with Flirtey - the first parcel delivery company conducting real-life operations in the US. The goal is to develop a system that exploits direct/indirect communication between a smartphone and the aerial robot such that delivery landing "on top" of the smartphone becomes possible. Such an approach will enable commercial parcel delivery within challenging and cluttered urban environments. Within the framework of the project, we seek the most reliable and novel, yet technologically feasible, solution to the problem at hand. The aerial robot will be capable of visual processing and may implement different communication protocols, while the smartphone should be considered "as available" on the market.

Research Tasks:

Team:

Collaborators: Flirtey - http://flirtey.com/

Budget: $2,000 |